AI-Assisted Coding in Enterprise: The Technical Leader’s Implementation Guide

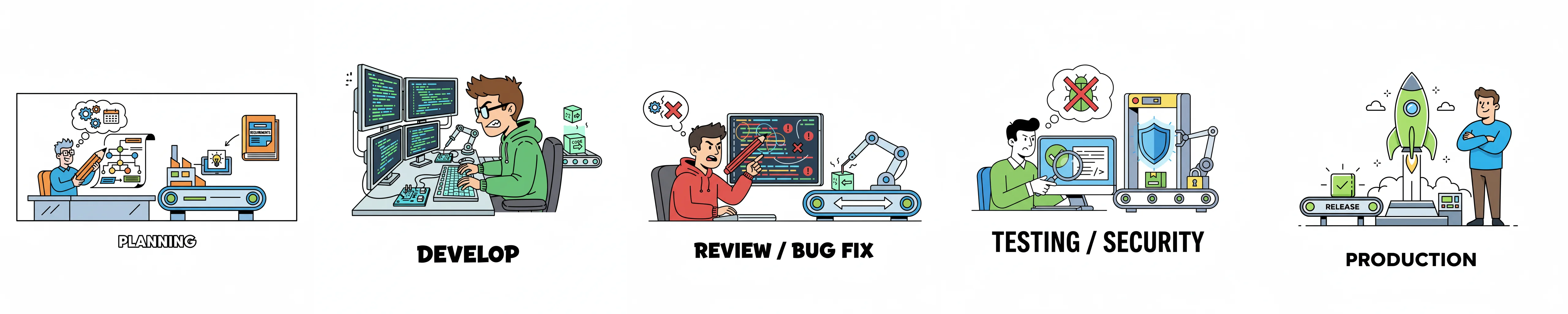

This comprehensive research report synthesizes the current state of AI-assisted development practices (2023-2025) for technical leaders managing large-scale software development teams. The central finding: AI coding tools like GitHub Copilot now generate 25-46% of code at major tech companies, but success requires systematic integration of quality assurance, security practices, and lightweight governance—not just tool deployment. Organizations that treat this as an organizational transformation achieve 10-30% productivity gains; those treating it as plug-and-play struggle with quality, security, and adoption.

How developers are actually using AI tools today

The AI coding landscape has matured from experimental autocomplete to production-critical workflows. Three distinct categories have emerged, each requiring different integration strategies: chat-based coding for architecture and learning, IDE-integrated “vibe coding” for daily development flow, and agentic systems for autonomous task completion.

Chat-based tools (ChatGPT, Claude) serve as external coding mentors. Claude leads code-specific enterprise usage, with a 42% share of enterprise code generation and a 200K+ token context window, while ChatGPT leads general-purpose usage. Developers use these tools for architectural discussions, complex debugging across large codebases, and learning new frameworks. The most effective practitioners follow structured prompting: forcing the AI to ask clarifying questions before proposing solutions, executing step by step with approval gates, and providing rich context, including error messages, framework versions, and API constraints. Model selection matters by phase: advanced reasoning models (Claude 3.7, GPT-4o) for planning, efficient models for bulk generation, and focused models for scoped fixes.
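The structured-prompting habits above can be captured in a reusable template. This is a minimal sketch, assuming prompts are plain text sent to any chat model; `CodingContext` and `build_structured_prompt` are hypothetical names, not part of any vendor SDK.

```python
from dataclasses import dataclass, field


@dataclass
class CodingContext:
    """Context a developer supplies alongside the task (all fields illustrative)."""
    task: str
    error_message: str = ""
    framework_versions: dict[str, str] = field(default_factory=dict)
    api_constraints: list[str] = field(default_factory=list)


def build_structured_prompt(ctx: CodingContext) -> str:
    """Assemble a prompt that asks for clarifying questions and a gated, step-by-step plan."""
    lines = [f"Task: {ctx.task}"]
    if ctx.error_message:
        lines.append(f"Error message:\n{ctx.error_message}")
    if ctx.framework_versions:
        versions = ", ".join(f"{name} {ver}" for name, ver in sorted(ctx.framework_versions.items()))
        lines.append(f"Framework versions: {versions}")
    for constraint in ctx.api_constraints:
        lines.append(f"Constraint: {constraint}")
    # Force clarification before any solution is proposed.
    lines.append("Before proposing a solution, ask any clarifying questions you need.")
    # Approval gate: the model must pause after each step of its plan.
    lines.append("Then propose a numbered plan and wait for my approval before executing each step.")
    return "\n".join(lines)
```

Keeping the template in code rather than in each developer’s head makes the approval-gate instruction consistent across a team.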

IDE-integrated tools represent the productivity core. GitHub Copilot leads with 1.5M+ users and generates up to 46% of code in files where it is enabled, but newer entrants challenge it in different niches. Cursor (an AI-native IDE) excels at project-wide generation with its Composer mode and codebase-aware queries, and is preferred for complex projects requiring architectural understanding. Windsurf (Codeium) emphasizes agentic workflows through its Cascade technology with multi-agent orchestration, offering the fastest experience for simple tasks. Cline (an open-source VS Code extension) provides privacy-first development with human approval for every change and supports multiple LLM providers, including locally hosted models that keep data inside the environment. Each shows 72-86% developer satisfaction when properly implemented, with acceptance rates of 25-35% considered healthy. The “80/20 rule” dominates success: 80% planning with comprehensive product requirements, 20% execution. Project-specific rules files (.cursor/rules, .windsurfrules) dramatically improve output quality by encoding team standards and architectural patterns.

Agentic systems like Devin represent autonomous capability. Devin resolved 13.86% of real-world GitHub issues on SWE-bench end to end, versus a previous state of the art of 1.96%, and has powered enterprise migrations at companies like Nubank with reported 12x efficiency improvements and 20x cost savings. These systems work best for repetitive, large-scale work (data migrations, documentation updates, test generation), not production bugs requiring nuanced understanding. The critical pattern: human checkpoints at the planning and PR stages maintain safety while capturing speed benefits. Mobile access via Slack lets on-call engineers delegate urgent tasks to agents that work asynchronously.
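The two-checkpoint pattern can be sketched as a small pipeline. This is a hypothetical illustration, not Devin’s interface: `plan`, `execute`, and `approve` are caller-supplied callables standing in for the agent and the human reviewer.

```python
from typing import Callable


class RejectedAtCheckpoint(Exception):
    """Raised when a human reviewer declines to continue at a checkpoint."""


def run_agent_task(
    task: str,
    plan: Callable[[str], list[str]],
    execute: Callable[[list[str]], str],
    approve: Callable[[str, str], bool],
) -> str:
    """Run an agent task with human checkpoints at the planning and PR stages."""
    steps = plan(task)
    # Checkpoint 1: a human approves the plan before any code is written.
    if not approve("plan", "\n".join(steps)):
        raise RejectedAtCheckpoint("plan")
    diff = execute(steps)
    # Checkpoint 2: a human reviews the resulting PR diff before it can merge.
    if not approve("pr", diff):
        raise RejectedAtCheckpoint("pr")
    return diff
```

Rejecting at the plan stage is the cheap failure: no agent time is spent executing a plan nobody wanted.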

Team adoption patterns show clear maturity stages. AI Newbies (57% of teams) use AI only for content creation, maintaining traditional workflows. Pragmatists (35%) blend human-AI collaboration effectively across generation and workflow automation. Vibe Coders (4%) heavily generate code but lack systematic integration. AI Orchestrators (<4%) represent enterprise maturity with AI-driven workflows and systematic process integration. The adoption gap matters: directors show 38% adoption while managers lag at 17%, creating bottlenecks when those controlling processes resist tools their teams need.

Real productivity data contradicts simple narratives. GitHub’s controlled experiment showed 55% faster task completion, and Accenture’s randomized controlled trial found that 90% of developers felt more fulfilled in their jobs. ZoomInfo’s 400+ developer deployment achieved 72% satisfaction with median 20% time savings. But METR’s rigorous study of experienced developers in mature codebases found a 19% slowdown: developers predicted a 24% speedup but actually took longer. Context determines outcomes: simple tasks show >30% gains, complex tasks <10% gains. Junior developers benefit most in raw speed; experienced developers value automation of repetitive work over raw productivity gains. The perception-reality gap means technical leaders must measure objectively rather than rely on self-reported productivity.
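Measuring objectively means comparing predicted speedup against timed outcomes. A minimal sketch: the task timings below are hypothetical, chosen so the result mirrors METR’s headline figures (a 24% predicted reduction in completion time versus a 19% measured increase); METR’s own analysis used a more careful statistical model.

```python
from statistics import mean


def time_change(baseline_minutes: list[float], ai_minutes: list[float]) -> float:
    """Relative change in mean completion time; positive means slower with AI."""
    base = mean(baseline_minutes)
    return (mean(ai_minutes) - base) / base


def perception_gap(predicted_change: float, measured_change: float) -> float:
    """Gap between the measured and the predicted change in completion time."""
    return measured_change - predicted_change


# Hypothetical task timings (minutes) without and with AI assistance.
baseline = [90.0, 100.0, 110.0]
with_ai = [107.0, 119.0, 131.0]

measured = time_change(baseline, with_ai)  # +0.19, i.e. 19% slower
predicted = -0.24                          # developers expected 24% less time
print(f"measured {measured:+.0%}, predicted {predicted:+.0%}, "
      f"gap {perception_gap(predicted, measured):+.0%}")
```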

What’s actually breaking in production

The rapid adoption creates systematic problems that technical leaders must anticipate and mitigate. Research analyzing 211 million lines of code reveals fundamental quality challenges emerging from AI assistance.

Code quality degradation follows predictable patterns. Copy-pasted code rose from 8.3% to 12.3% between 2021 and 2024, while refactoring dropped from 25% to under 10%. AI generates complete but bloated solutions, importing entire new packages when existing ones suffice and systematically violating DRY principles. Experimental codepaths multiply: AI’s iterative nature creates conditional branches that increase complexity and linger as forgotten dead code. The “almost right, but not quite” problem frustrates 66% of developers: code that compiles and looks correct but contains subtle semantic flaws or lacks proper error handling. GitClear’s analysis finds AI “violates the DRY-ness of repos visited,” creating maintainability crises where any change requires editing dozens of files, the classic “shotgun surgery” antipattern.
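Copy-paste growth can be tracked with a simple duplicate-block detector. A minimal sketch, assuming duplicates are found by hashing whitespace-normalized windows of consecutive non-blank lines; the window size of 4 is an arbitrary illustration, and this is not GitClear’s methodology.

```python
import hashlib
from collections import defaultdict


def find_duplicate_blocks(files: dict[str, str], window: int = 4) -> dict[str, list[tuple[str, int]]]:
    """Map a hash of each repeated `window`-line block to every (file, start_line) where it occurs."""
    seen: dict[str, list[tuple[str, int]]] = defaultdict(list)
    for path, text in files.items():
        # Normalize internal whitespace and drop blank lines so reformatting doesn't hide copies.
        numbered = [(i + 1, " ".join(raw.split())) for i, raw in enumerate(text.splitlines())]
        numbered = [(n, norm) for n, norm in numbered if norm]
        for start in range(len(numbered) - window + 1):
            chunk = "\n".join(norm for _, norm in numbered[start:start + window])
            digest = hashlib.sha256(chunk.encode()).hexdigest()
            seen[digest].append((path, numbered[start][0]))
    # Keep only blocks that appear more than once.
    return {h: locs for h, locs in seen.items() if len(locs) > 1}
```

Tracking the number of duplicated blocks per release gives a rough trend line for the copy-paste growth described above.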

Security vulnerabilities plague AI-generated code at alarming rates. Georgetown CSET’s November 2024 study found nearly 50% of code snippets from five major LLMs contained exploitable bugs. The vulnerability rate has remained flat at ~40-45% for two years despite model improvements. Stanford research found developers with AI assistants wrote significantly less secure code than those without access. The vulnerability patterns are predictable: missing input validation appears most frequently (SQL injection 20% failure rate, log injection 88% insecure rate, XSS 12% insecure), improper authentication/authorization with hard-coded credentials, and dependency explosion creating attack surface expansion. Novel AI-specific risks compound traditional vulnerabilities: hallucinated dependencies enable “slopsquatting” attacks where malicious actors register non-existent package names AI suggests; stale libraries reintroduce CVEs patched after training cutoffs; training data contamination means 81% of open-source code used for training contains vulnerabilities. Critically, 68% of developers now spend MORE time resolving security vulnerabilities than before AI adoption.
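One mitigation for slopsquatting is to reject any AI-suggested dependency that is not on an approved list before it is installed. A minimal sketch, assuming a pip-style requirements file and an organization-maintained allowlist; the allowlist contents and the misspelled package in the usage check are hypothetical.

```python
import re

# Capture the distribution name at the start of a requirement line.
_NAME_RE = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def normalize(name: str) -> str:
    """Normalize a package name per PEP 503 (lowercase, runs of -_. collapse to -)."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unapproved_requirements(requirements_text: str, allowlist: set[str]) -> list[str]:
    """Return requirement names not on the allowlist (candidates for hallucinated packages)."""
    approved = {normalize(n) for n in allowlist}
    flagged = []
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if not line or line.startswith("-"):  # skip blanks and pip options like -r / -e
            continue
        match = _NAME_RE.match(line)
        if match and normalize(match.group(1)) not in approved:
            flagged.append(match.group(1))
    return flagged
```

Running this in CI before `pip install` means a hallucinated name fails the build instead of pulling whatever an attacker registered under it.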

Architectural drift accelerates dangerously. vFunction’s 2025 study of 600+ IT professionals found 93% experienced negative business outcomes from misalignment between implemented architecture and documented standards. Only 36% of engineers believe documentation matches production. AI doesn’t understand organizational architecture, design patterns, or scaling goals: it generates locally “correct” code without considering existing patterns, system-wide consistency, or long-term maintainability. As conversations progress, architectural constraints stated at the start of a session get deprioritized. The “Lost in the Middle” problem compounds this: LLMs make worse use of information placed in the middle of long contexts than of information at the beginning or end. What traditionally took years to accumulate now happens in sprints, because AI multiplies the speed at which architectural violations are introduced.
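One commonly suggested mitigation, not taken from the studies above, is to restate architectural constraints at both the start and the end of a long prompt so they never sit only in the middle of the context. A minimal sketch; `assemble_prompt` and the constraint text are hypothetical.

```python
def assemble_prompt(constraints: list[str], context_chunks: list[str], request: str) -> str:
    """Place architectural constraints before and after the (possibly long) context."""
    rules = "\n".join(f"- {c}" for c in constraints)
    parts = [
        "Architectural constraints (must be followed):",
        rules,
        *context_chunks,  # retrieved files, chat history, etc. land in the middle
        "Reminder of the architectural constraints:",
        rules,
        f"Request: {request}",
    ]
    return "\n\n".join(parts)
```

Repeating the rules costs a few tokens per request; whether it measurably reduces drift for a given model is worth checking on the team’s own codebase.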

Technical debt accumulates faster than teams can manage. Academic research identified 72 antipatterns in AI-based systems, including four new debt types: data debt (quality/availability), model debt (selection/maintenance), configuration debt (management complexity), and ethics debt (responsible deployment). The most frequent AI-specific antipattern is dead experimental codepaths. The “70% problem” emerges consistently: AI generates impressive prototypes that reach ~70% completion quickly, then hits a wall where the remaining 30% requires deep engineering knowledge that non-engineers lack. Each fix introduces new issues because the AI lacks a holistic understanding of the system. In one example reported on Reddit, a developer three months into an AI-assisted project reached the point where any change required editing dozens of files, a sign of severe accumulated technical debt.

Over-reliance creates dangerous knowledge gaps. Stack Overflow’s 2024 survey found only 43% trust AI accuracy, and 38% report AI provides inaccurate information 50%+ of the time. The paradox: developers accept code they don’t fully understand because it compiles and passes tests. Junior developers in particular lack the mental models to understand what’s wrong when bugs appear, cannot reason about causes, and keep returning to AI rather than developing expertise. The cognitive shortcut that makes AI accessible actually impedes learning. Acceptance rates are poor metrics when they count problematic acceptances equally with good ones, creating perverse incentives to accept more rather than review critically.

SDLC integration failures multiply organizational friction. Writer’s survey found 75% of company leaders thought their AI rollout was successful, but only 45% of employees agreed. Almost half of C-suite executives say adoption is “tearing their company apart.” Policy gaps exacerbate problems: 73% of developers don’t know whether their companies have AI policies. Apiiro’s 2024 research revealed alarming integration failures: AI-generated code introduced 322% more privilege escalation paths and 153% more design flaws, yet merged into production 4x faster than regular commits, meaning insecure code bypassed normal review cycles. Misguided management compounds the problems through OKRs that track AI usage without regard for value, publicized individual code generation metrics, leaderboards, and tool mandates issued without understanding workflows. The Google DORA report quantifies the impact: a 7.2% decrease in delivery stability for every 25% increase in AI adoption, even as 75% of developers feel more productive.

Building effective quality gates for AI code

Addressing these systemic problems requires multi-layered defense combining automated analysis, AI-powered review, human oversight, and security-first practices.

Automated static analysis forms the foundation layer. SonarQube supports 35+ languages with AI-powered issue prioritization and automatically blocks deployments when quality metrics aren’t met; the free Community Edition suits small teams, while paid tiers ($720+/year) add compliance features. DeepSource offers autofix capabilities with one-click resolution and code health tracking over time, integrated natively with GitHub/GitLab ($8-24/user/month). Semgrep provides lightweight open-source pattern matching with custom security rules, fast scans without builds, and extensive community rulesets. CodeQL treats code as queryable data for semantic analysis that can identify zero-days; it is free for open source but requires GitHub Advanced Security for private repos. These deterministic tools catch objective violations before more sophisticated analysis begins.
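Blocking on failed metrics reduces to comparing measured values against thresholds. A minimal sketch in the spirit of a SonarQube quality gate, assuming metrics have already been collected into a dict; the metric names and thresholds are hypothetical, and this does not call SonarQube’s API.

```python
import operator
from dataclasses import dataclass


@dataclass(frozen=True)
class Condition:
    metric: str
    op: str           # "<=" or ">="
    threshold: float


_OPS = {"<=": operator.le, ">=": operator.ge}


def evaluate_gate(metrics: dict[str, float], conditions: list[Condition]) -> list[str]:
    """Return human-readable failures; an empty list means the gate passes."""
    failures = []
    for c in conditions:
        value = metrics.get(c.metric)
        if value is None:
            failures.append(f"{c.metric}: missing")  # treat missing data as a failure
        elif not _OPS[c.op](value, c.threshold):
            failures.append(f"{c.metric}: {value} (required {c.op} {c.threshold})")
    return failures


# Hypothetical gate applied to new code only.
GATE = [
    Condition("new_coverage_pct", ">=", 80.0),
    Condition("new_critical_issues", "<=", 0),
    Condition("new_duplicated_lines_pct", "<=", 3.0),
]
```

In CI, a non-empty result would translate into a non-zero exit code so the merge is blocked.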

AI-powered code review platforms add contextual intelligence. Qodo Merge uses RAG (Retrieval-Augmented Generation) for deep codebase context, providing automated PR descriptions, risk-based diffing that highlights critical changes, and specialized agents sharing codebase intelligence ($15/user/month). CodeRabbit delivers codebase-aware reviews using AST analysis, learns from user feedback via MCP integration, and runs static analyzers and security tools automatically, with SOC 2 Type II certification ($24/month Pro). Graphite Agent focuses on minimal false positives with real-time bug detection and a privacy-first approach (it doesn’t store or train on data). Aikido Security generates custom rules by learning from past PRs, with AI-driven SAST, secrets detection, and compliance monitoring (SOC 2, GDPR, HIPAA). These platforms understand code relationships beyond line-by-line analysis.

Human-in-the-loop patterns maintain critical oversight. Microsoft’s production implementation uses AI as the first reviewer for style, bugs, null references, and inefficient algorithms, while humans focus on architectural and business logic concerns, significantly reducing review time while maintaining quality. Checkpoint-based workflows implement risk thresholds: low-risk changes auto-resolve, while high-risk changes route to human review, with state persistence enabling resumption after approval. AWS Augmented AI (A2I) provides structured human loops in which confidence score thresholds determine review triggers. LangGraph provides interrupt functions that pause execution for human input, with persistent state across interruptions. The pattern succeeds when AI handles repetitive verification and humans provide judgment and context.
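The risk-threshold checkpoint can be sketched as a router that auto-resolves low-risk changes and parks high-risk ones in persisted state until a human decides. A minimal sketch, assuming a precomputed risk score in [0, 1] and a JSON file as the state store; the 0.3 threshold is illustrative, and this is not the A2I or LangGraph API.

```python
import json
from pathlib import Path


def route_change(change_id: str, risk: float, store: Path, threshold: float = 0.3) -> str:
    """Auto-approve low-risk changes; persist high-risk ones as pending human review."""
    if risk < threshold:
        return "auto_approved"
    state = json.loads(store.read_text()) if store.exists() else {}
    state[change_id] = {"risk": risk, "status": "pending_review"}
    store.write_text(json.dumps(state))
    return "pending_review"


def resume_after_review(change_id: str, approved: bool, store: Path) -> str:
    """Reload persisted state (possibly in a later process) and record the human decision."""
    state = json.loads(store.read_text())
    state[change_id]["status"] = "approved" if approved else "rejected"
    store.write_text(json.dumps(state))
    return state[change_id]["status"]
```

Persisting the pending state is what lets the review happen hours later, in a different process, without re-running the analysis.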

Multi-agent review systems scale inspection across dimensions. CodeAgent’s 2024 research demonstrated specialized agents for different review aspects with QA-Checker supervisory agent preventing “topic drift.” Production implementations deploy parallel agents: Quality Agent for structure and maintainability, Security Agent for vulnerabilities and secrets, Performance Agent for N+1 queries and inefficient algorithms, Documentation Agent for completeness. Zero-copy database forks enable 4x faster analysis through parallel agent execution with minimal storage overhead. Qodo’s multi-agent architecture coordinates Gen (code generation), Cover (test coverage), and Merge (PR review) agents sharing org-wide context for consistency.
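The parallel-agent pattern amounts to fanning one diff out to specialized reviewers and merging their findings. A minimal sketch, assuming each agent is a plain function; the agents here are crude string-matching stand-ins for LLM-backed reviewers, and their names and heuristics are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Finding = tuple[str, str]  # (agent name, message)
Agent = Callable[[str], list[str]]


def security_agent(diff: str) -> list[str]:
    # Stand-in for an LLM-backed security review.
    return ["possible hard-coded secret"] if "password =" in diff else []


def performance_agent(diff: str) -> list[str]:
    # Crude stand-in: flags diffs containing both a for-loop and a .query( call.
    return ["query inside loop (possible N+1)"] if "for " in diff and ".query(" in diff else []


def documentation_agent(diff: str) -> list[str]:
    # Stand-in: flags diffs that add a function but contain no docstring at all.
    return ["new function lacks a docstring"] if "def " in diff and '"""' not in diff else []


def review(diff: str, agents: dict[str, Agent]) -> list[Finding]:
    """Run all agents concurrently and merge their findings in a stable, name-sorted order."""
    with ThreadPoolExecutor(max_workers=max(1, len(agents))) as pool:
        futures = {name: pool.submit(agent, diff) for name, agent in agents.items()}
    return [(name, msg) for name in sorted(futures) for msg in futures[name].result()]
```

Sorting by agent name keeps the merged report deterministic even though the agents finish in arbitrary order.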

Security scanning requires specialized tooling. Snyk’s DeepCode AI combines symbolic and generative AI trained on 25M+ data flow cases; Snyk reports 80% accurate autofixes without hallucinations, alongside real-time in-IDE vulnerability detection and context-aware risk scoring. The Snyk MCP Server for Cursor enables zero-setup interoperability with real-time security checks during AI code generation. Parasoft focuses on safety-critical systems with ML-prioritized violations and LLM integration for specific recommendations. For lightweight needs, Semgrep’s free tier provides adequate security pattern matching. All scanning must integrate at multiple points: real-time IDE feedback, pre-commit hooks, PR-level analysis, CI/CD blocking, and continuous monitoring.
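At the pre-commit integration point, a secret check can be as small as a few regular expressions run over the files being committed. A minimal sketch; the patterns are illustrative (the `AKIA` prefix is the well-known AWS access key ID format, the assignment pattern is a heuristic) and far less complete than a dedicated scanner.

```python
import re

SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    # Heuristic: a credential-like name assigned a quoted literal of 6+ characters.
    "hardcoded_credential": re.compile(r"""(?i)(password|passwd|secret|api_key|token)\w*\s*=\s*["'][^"']{6,}["']"""),
}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for every suspected secret."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, name))
    return hits


def main(paths: list[str]) -> int:
    """Scan the files a hook passes in; a non-zero return should block the commit."""
    failed = False
    for path in paths:
        with open(path, encoding="utf-8", errors="ignore") as fh:
            for lineno, name in scan_text(fh.read()):
                print(f"{path}:{lineno}: {name}")
                failed = True
    return 1 if failed else 0
```

A hook script would call `sys.exit(main(sys.argv[1:]))`, since hook runners pass the staged filenames as arguments.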

Compliance and architecture validation address organizational requirements. GitHub branch protection with required checks validates architecture decision records (ADRs), import patterns, layering rules, and dependency directions. Custom GitHub Actions enforce organizational standards automatically. SonarQube custom rules and ArchUnit (Java) provide test-based architecture validation. AI Bill of Materials tracking (Wiz AI-SPM) catalogs models, datasets, tools, and third-party services for compliance and security understanding. Aikido automates SOC 2, GDPR, and HIPAA monitoring in development workflows.
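Layering rules of the kind ArchUnit enforces for Java can be approximated in Python with the standard `ast` module. A minimal sketch, assuming a hypothetical three-layer layout (`app.api` → `app.service` → `app.repository`) in which imports may only point inward, toward later layers in the list.

```python
import ast
from typing import Optional

# Hypothetical layer order, outermost first: a module may import its own layer or inner ones.
LAYERS = ["app.api", "app.service", "app.repository"]


def layer_of(module: str) -> Optional[int]:
    """Index of the layer a dotted module name belongs to, or None if outside all layers."""
    for i, prefix in enumerate(LAYERS):
        if module == prefix or module.startswith(prefix + "."):
            return i
    return None


def layering_violations(module: str, source: str) -> list[str]:
    """Return imports in `source` that point from `module` to an outer layer."""
    own = layer_of(module)
    if own is None:
        return []
    imported: list[str] = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            imported.extend(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Relative imports (level > 0) are skipped in this sketch.
            imported.append(node.module)
    violations = []
    for name in imported:
        target = layer_of(name)
        if target is not None and target < own:  # importing an outer layer
            violations.append(name)
    return violations
```

Wired into a required status check, this turns an architecture rule from documentation into something an AI-generated PR cannot silently violate.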

Practical GitHub Actions implementation enables rapid deployment. The AI Code Review Action supports multiple providers (OpenAI, Anthropic, Google, DeepSeek, Perplexity) with file pattern inclusion/exclusion, at typical API costs of $5-20/month. Label-triggered workflows control costs by reviewing only flagged PRs, reducing expenses 80-90%. Semgrep full scans run weekly with SARIF output uploaded to GitHub Security, while differential scans analyze only PR changes in 30-60 seconds. Running the same pre-commit hooks in CI ensures checks apply even to web-based PRs, where local hooks don’t execute. Complete quality gate workflows combine linting, security scanning, test coverage, static analysis, and AI review in parallel jobs with clear pass/fail criteria.
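A CI step that fails on scanner findings can read the SARIF file directly. A minimal sketch, assuming a SARIF 2.1.0 file such as one produced by Semgrep’s SARIF output; it relies only on the standard `runs[].results[]` structure and each result’s `level` (treated as `warning` when absent), and the policy of blocking only on `error` is hypothetical.

```python
import json

BLOCKING_LEVELS = {"error"}  # hypothetical policy: only errors block the merge


def blocking_results(sarif: dict) -> list[str]:
    """Return 'ruleId at uri:line' for results whose level is in BLOCKING_LEVELS."""
    blocked = []
    for run in sarif.get("runs", []):
        for result in run.get("results", []):
            level = result.get("level", "warning")  # default when level is omitted
            if level not in BLOCKING_LEVELS:
                continue
            loc = (result.get("locations") or [{}])[0].get("physicalLocation", {})
            uri = loc.get("artifactLocation", {}).get("uri", "?")
            line = loc.get("region", {}).get("startLine", "?")
            blocked.append(f"{result.get('ruleId', 'unknown')} at {uri}:{line}")
    return blocked


def gate(path: str) -> int:
    """Exit code for CI: 1 if any blocking result exists, else 0."""
    with open(path, encoding="utf-8") as fh:
        findings = blocking_results(json.load(fh))
    for finding in findings:
        print(finding)
    return 1 if findings else 0
```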

Cost-optimized implementation tiers balance thoroughness with budget. Free tier (for all PRs) includes pre-commit hooks, ESLint/Prettier, Semgrep SAST, GitHub CodeQL (public repos), and basic Dependabot. Low-cost tier ($10-30/month) adds Codecov, Snyk free plan (200 tests/month), and SonarCloud. Premium tier (label-triggered) reserves AI code review, advanced SAST, and license checking for complex PRs. Implementation follows weekly priorities: Week 1 sets up pre-commit hooks and linting, Week 2 adds Semgrep security, Week 3 implements PR labeling and automation, Week 4 adds test coverage, Week 5+ experiments with AI review for complex cases.
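The tiering reduces to a small routing decision per PR. A minimal sketch: the check names are placeholders rather than real workflow job IDs, `ai-review` is a hypothetical opt-in label, and CodeQL is enabled only for public repositories, matching its free availability noted above.

```python
# Placeholder check names for each tier (not real workflow job IDs).
FREE_TIER = ["pre-commit", "eslint-prettier", "semgrep-sast", "dependabot"]
LOW_COST_TIER = ["codecov", "snyk", "sonarcloud"]
PREMIUM_TIER = ["ai-review", "advanced-sast", "license-check"]
PREMIUM_LABEL = "ai-review"  # hypothetical label that opts a PR into the premium tier


def checks_for_pr(labels: set[str], public_repo: bool, low_cost_enabled: bool = True) -> list[str]:
    """Free tier on every PR, low-cost tier when budgeted, premium tier only when labeled."""
    checks = list(FREE_TIER)
    if public_repo:
        checks.append("codeql")  # CodeQL is free for public repositories
    if low_cost_enabled:
        checks.extend(LOW_COST_TIER)
    if PREMIUM_LABEL in labels:
        checks.extend(PREMIUM_TIER)
    return checks
```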

Tool selection by team size optimizes resource allocation. Small teams (<10 developers) need GitHub Actions with AI Code Review Action, SonarQube Community, Semgrep, and pre-commit hooks ($0-10/month). Mid-size teams (10-50) benefit from Qodo Merge or CodeRabbit, Snyk Code, SonarQube Team Edition, and dedicated CI/CD integration ($50-100/month). Large enterprises (50+) require multi-agent systems, Snyk Enterprise with Agent Fix, SonarQube Enterprise, custom AI review pipelines, and human-in-loop workflows with checkpoints ($100+/month per developer).

What enterprises are learning from production deployments

Real-world implementations reveal critical success factors and common pitfalls that academic research cannot capture.

ZoomInfo’s systematic 400-developer rollout demonstrates methodical adoption. Their four-phase approach began with five engineers piloting for one week (8.8/10 satisfaction, 8.6/10 productivity improvement), expanded to 126 engineers (32% of developers) for a two-week trial with 72 survey respondents (8.0/10 satisfaction, 7.6/10 productivity), then moved to full rollout via a ServiceNow workflow. The quantitative results after one year: a 33% average acceptance rate and 6,500 suggestions daily generating 15,000 lines, with TypeScript/Java/Python showing ~30% acceptance while HTML/CSS/JSON lagged at 14-20%. Developer satisfaction reached 72% (the highest of all tools), 90% reported time savings (median 20%), 63% completed more tasks per sprint, and 77% reported improved work quality. The critical learning: acceptance rate correlates better with perceived productivity than raw velocity metrics do.
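Acceptance rate is accepted suggestions divided by suggestions shown, and breaking it down by language, as ZoomInfo did, exposes where the tool fits poorly. A minimal sketch over hypothetical telemetry events of the form `(language, accepted)`; this event shape is an assumption, not the Copilot Metrics API schema.

```python
from collections import Counter


def acceptance_by_language(events: list[tuple[str, bool]]) -> dict[str, float]:
    """Acceptance rate per language = accepted suggestions / shown suggestions."""
    shown: Counter = Counter()
    accepted: Counter = Counter()
    for language, was_accepted in events:
        shown[language] += 1
        if was_accepted:
            accepted[language] += 1
    return {lang: accepted[lang] / shown[lang] for lang in shown}
```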

Accenture’s randomized controlled trial provides rigorous experimental evidence. Developers adopted GitHub Copilot quickly: 81.4% installed the IDE extension the same day they received access, 96% started accepting suggestions that same day, the average time from first suggestion to first acceptance was one minute, and 67% used it at least 5 days a week. Code quality and velocity improved together: an 8.69% increase in pull requests (throughput), a 15% increase in PR merge rate (quality), an 84% increase in successful builds, 90% committed AI-generated code, 91% merged PRs containing AI code, and an 88% retention rate for accepted characters in the editor. Developer experience improved markedly: 90% felt more fulfilled with their job, 95% enjoyed coding more, 70% reported less mental effort on repetitive tasks, and 54% spent less time searching for information, all while staying in a flow state. The study suggests GitHub Copilot does not inherently sacrifice quality for speed.

Vendor-published customer deployments across industries reveal consistent patterns. Access Holdings (financial services) reduced coding time from 8 hours to 2 (75% reduction), chatbot launches from 3 months to 10 days (90% reduction), and presentation preparation from 6 hours to 45 minutes (87% reduction). Allpay (payment services) achieved a 10% productivity increase with a 25% increase in delivery volume to production. Stacks (accounting automation) built their entire platform with 10-15% of production code generated by Gemini Code Assist, reducing closing times through automated reconciliations. The pattern: the largest gains come in repetitive or well-defined tasks, gains are modest in complex problem-solving, and developer satisfaction improves across every deployment.

Market research quantifies the landscape. Stack Overflow’s 2024 survey of 65,000+ developers found 76% using or planning to use AI tools (up from 70% in 2023), but favorability declined from 77% to 72%. Only 43% trust AI accuracy, while 79% cite misinformation as their top ethical concern. Usage patterns show 82% use AI for writing code, 46% are interested in using it for testing but hesitant, and 70% of professionals don’t see AI as a job threat. Geographic variations matter: India shows 59% trust and 75% favorability, while Germany (60% favorable), Ukraine (61%), and the UK (62%) lag significantly. GitHub research shows it takes 11 weeks for users to fully realize satisfaction and productivity gains, and that proper enablement produced a 26.4% increase in adoption within two weeks. The acceptance rate (25-35% considered healthy) best predicts perceived productivity across all SPACE dimensions.

DX research identifies critical success factors. High-impact use cases, ranked by time savings, are stack trace analysis, refactoring existing code, mid-loop code generation, test case generation, and learning new techniques. The fundamental insight: “AI-driven coding requires new techniques many developers do not know yet.” Teams without proper training see minimal benefits; teams with structured education see transformative gains. Advanced techniques that matter include meta-prompting (embedding instructions within prompts), prompt chaining (feeding each step’s output into the next prompt), and context management (knowing how to provide relevant context). Organizations that treat AI code generation as a process challenge rather than a technology challenge achieve 3x better adoption rates.
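Prompt chaining feeds each step’s output into the next prompt. A minimal sketch, assuming a hypothetical `complete(prompt) -> str` function wrapping whatever model the team uses; it is stubbed here so the example runs offline, and the three step templates are illustrative.

```python
from typing import Callable

STEPS = [
    "List the requirements implied by this request:\n{input}",
    "Write a step-by-step implementation plan for these requirements:\n{input}",
    "Write the code for this plan:\n{input}",
]


def run_chain(request: str, complete: Callable[[str], str], steps: list[str] = STEPS) -> list[str]:
    """Run each step template on the previous step's output; return every intermediate output."""
    outputs = []
    current = request
    for template in steps:
        current = complete(template.format(input=current))
        outputs.append(current)
    return outputs


def fake_complete(prompt: str) -> str:
    # Offline stub: wraps the prompt's last line so the data flow between steps is visible.
    return f"step({prompt.splitlines()[-1]})"
```

Keeping every intermediate output, not just the final code, gives reviewers the requirements and plan to check the code against.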

Gartner predictions warn against overoptimism. While 75% of enterprise software engineers will use AI code assistants by 2028 (up from less than 10% in early 2023), Gartner analyst Philip Walsh’s reality check matters: even with 50% faster coding, expect only about a 10% overall cycle time improvement, because coding represents only ~20% of the total SDLC. The warning against top-down mandates resonates: “What we do not recommend is some kind of top-down productivity mandate. That doesn’t work.” Organizations need learning cultures with freedom to experiment and tolerance for failure to achieve optimal adoption.
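Walsh’s arithmetic is an instance of Amdahl’s law: only the coding share of the cycle gets faster. Reading “50% faster coding” as coding time cut in half (an interpretation of the phrasing), the numbers work out as follows.

```python
def overall_time_reduction(coding_share: float, coding_time_reduction: float) -> float:
    """Fraction of total cycle time saved when only the coding share gets faster (Amdahl's law)."""
    new_total = (1 - coding_share) + coding_share * (1 - coding_time_reduction)
    return 1 - new_total


# Coding is ~20% of the SDLC and coding time drops by 50%.
print(f"{overall_time_reduction(0.20, 0.50):.0%}")  # 10%

# Even if coding time dropped to zero, the cycle shrinks by at most the coding share.
print(f"{overall_time_reduction(0.20, 1.00):.0%}")  # 20%
```

The second line is the useful ceiling for planning: without changes to review, testing, and deployment, AI coding assistance alone cannot shorten this cycle by more than about 20%.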

Enterprise AI spending surveys, including Andreessen Horowitz’s CIO survey, reveal market dynamics. Model API spending more than doubled, from $3.5B to $8.4B, with a shift from development to production inference. Code generation represents AI’s first breakout use case, with a $1.9B ecosystem in one year. Anthropic captures 32% enterprise market share (the top player), with Claude holding 42% for code generation specifically, driven by its strength in coding. Claude 3.5 Sonnet (June 2024) enabled new categories: AI IDEs (Cursor, Windsurf), app builders (Lovable, Bolt, Replit), and enterprise coding agents (Claude Code, All Hands). Innovation budgets dropped from 25% to 7% of AI spending as AI moved into centralized IT and business unit budgets: “Gen AI is no longer experimental but essential to business operations.”

Failure patterns provide cautionary lessons. Writer’s enterprise survey found 42% of C-suite executives say AI adoption is “tearing their company apart,” even though 88% of employees and 97% of executives report benefiting. Organizations with a formal AI strategy report an 80% success rate vs. 37% for those without one. In addition, 68% of executives report friction between IT and other departments, and 72% observe siloed AI development. Successful organizations like Qualcomm systematically vetted 25+ use cases, defined 70 workflows, and saved ~2,400 hours/month across users. Salesforce empowered 50 champions across organizations to build apps with no-code options, Python frameworks, and APIs. The pattern: a systematic approach with clear use cases succeeds; scattered tool adoption without strategy fails.

What researchers are discovering in controlled studies

Academic research from 2023-2025 provides empirical rigor beyond industry case studies, revealing fundamental capabilities and limitations.

Productivity measurement studies reveal context-dependent outcomes. GitHub Copilot research by Ziegler et al. (Communications of the ACM, 2024) analyzed 2,047 developers with matched telemetry and found acceptance rate to be the strongest predictor of perceived productivity (r≈0.5), with developers accepting ~29% of suggestions and averaging 312+ daily completions. Acceptance varies by time: 38% during work hours, 42% in the evening, and 43% on weekends, with junior developers showing higher acceptance and greater productivity gains. METR’s 2025 randomized controlled trial found a 19% slowdown for experienced developers using Cursor Pro with Claude 3.5/3.7 on 246 real tasks in mature open-source projects (>1M lines). Developers predicted a 24% speedup before the study and estimated a 20% speedup afterward, yet actually slowed down; outside experts had predicted a 38-39% speedup. Developers accepted <44% of AI-generated code, 75% read every line, and 56% made major modifications to clean up output. The critical implication: these results apply to experienced developers in large, mature codebases with strict quality standards, so don’t assume automatic productivity gains without rigorous measurement.

Code quality research demonstrates capability boundaries. An ICSE 2024 study by Du et al. found all 11 tested LLMs performed significantly worse on class-level than on method-level code generation. Most models performed better when generating methods incrementally than when generating entire classes, with limited ability to generate dependent code correctly. An AST 2024 study of GitHub Copilot test generation found that 45.28% of generated tests passed when an existing test suite was present, but only 7.55% passed without one; code commenting strategies also significantly affected quality. An automotive industry case study (AMCIS 2024) measured impact using the SPACE framework: a 10.6% increase in pull requests and a 3.5-hour reduction in cycle time (2.4% improvement). The Google DORA report quantifies the gap: 75% felt more productive, but every 25% increase in AI adoption was associated with a 1.5% dip in delivery throughput and a 7.2% drop in delivery stability. Meanwhile, 39% report little or no trust in AI-generated code despite widespread usage.

Security research identifies persistent vulnerabilities. Georgetown CSET’s November 2024 report analyzing five LLMs found nearly 50% of code snippets contained exploitable bugs, with vulnerability rates flat at 40-45% for two years. CodeSecEval study (arXiv:2407.02395, 2024) by Anthropic/OpenAI/Google researchers showed only 55% of AI-generated code was secure across 80 tasks, 4 languages, 4 vulnerability types. Security performance hasn’t improved meaningfully even as syntactic correctness has—larger/newer models don’t generate significantly more secure code. Data poisoning research (ICPC 2024) by Natella et al. demonstrated AI generators vulnerable to targeted poisoning with <6% poisoned training data. The most frequent vulnerabilities align with CWE Top 25: missing input validation (SQL injection 20% failure, log injection 88% insecure, XSS 12% insecure), improper authentication/authorization with hard-coded credentials, and dependency-related issues including hallucinated packages enabling “slopsquatting” attacks.
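The most frequent failure, missing input validation leading to SQL injection, is easy to demonstrate with Python’s standard-library `sqlite3` module: a query built by string interpolation lets input rewrite the SQL, while a parameterized query passes the same input as data. The table and payload are illustrative.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # VULNERABLE: user input is spliced into the SQL text.
    return conn.execute(f"SELECT id, name FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Parameterized: the driver binds `name` as a value, never as SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "x' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: the payload matched every row
print(len(find_user_safe(conn, payload)))    # 0: treated as a literal name
```

Both functions look plausible in a quick review, which is why this class of bug survives in AI-generated code unless SAST or a reviewer specifically checks for interpolated SQL.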

Human factors research reveals adoption complexities. A CHI 2025 study of IBM watsonx Code Assistant (N=669) found net productivity increases, but not universal ones: benefits were unequally distributed and raised new considerations around code ownership and responsibility. Expectations of speed and quality were often misaligned with reality. A large-scale ICSE 2024 usability survey identified successes (automation of boilerplate, quick prototyping, learning assistance) and challenges (trust issues, verification burden, context limitations), revealing a gap between marketing promises and actual utility. The trust paradox manifests clearly: 75% feel more productive while 39% have little or no trust in the output. Cognitive load research (arXiv:2501.02684) showed that AI affects cognitive load differently by expertise: less experienced developers showed higher adoption and greater perceived gains, while experienced developers reported different cognitive effects that did not necessarily reduce load. Proactive AI agents increase efficiency compared with prompt-only assistance but cause workflow disruptions, so the two must be balanced.

Experience and expertise effects complicate simple narratives. Junior developers show the highest acceptance rates and greater perceived productivity gains, feel AI helps them write better code, and use it for learning and skill development. Experienced developers show lower acceptance rates and more selective usage; they value AI for automation, unfamiliar domains, and repetitive tasks, are less likely to report “writing better code,” evaluate suggestions more critically, and detect errors and patterns better. Language proficiency positively predicts staying in flow and focusing on satisfying work (r=0.18, p<0.01), while years of experience negatively predicts feeling less frustrated (r=-0.15, p<0.01) but positively predicts making progress in unfamiliar languages (p<0.05). Over-reliance risks are particularly acute for research code, which is often untested and written by developers with little software development training: the risk of accepting undetected errors is high, and the metacognitive demands of AI assistance require developers to keep their critical evaluation skills sharp.

Institutional research contributions shape the field. Microsoft Research powers GitHub Copilot, VS Code, and Visual Studio with research on retrieval-augmented and program analysis models. Debug-Gym (2025) achieved a 30% success rate improvement for Claude 3.7, 182% for OpenAI o1, and 160% for o3-mini in interactive debugging. GitHub Next studies acceptance rates as a productivity predictor across enterprises, with the Copilot Metrics API for organizational measurement. Google Research contributed Gemini Code Assist, Codey APIs, and documentation of five best practices: plan before coding, use the right tool for the task, provide context files, iterate on requirements, and review and validate outputs. Academic institutions produced key papers: Fudan University’s ClassEval benchmark, Delft University’s test generation studies, Georgetown CSET security vulnerability research, MIT/Princeton/UPenn multi-company productivity studies, University of Naples data poisoning vulnerability research, and Carnegie Mellon human factors research through CHI proceedings.

Best practices emerge from empirical evidence. Organizational implementations require training in AI-driven coding techniques many developers don’t know yet—meta-prompting, prompt chaining, context management. Multi-tool strategy provides complementary capabilities: conversational AI for exploration, IDE-integrated for in-flow assistance, code-specific models for generation—using multiple tools together yields 1.5-2.5x additional improvement. Governance frameworks must define appropriate use cases, establish coding standards alignment, create tailored review processes, specify usage guidelines, and address privacy/data handling. Measurement frameworks need leading indicators (developer satisfaction, acceptance rate telemetry) and lagging indicators (pull request throughput, cycle time, defect rates) using SPACE dimensions. Don’t rely solely on self-reported productivity—verify with objective metrics. Developer-level practices require always reviewing AI code as untrusted third-party code, focusing review on security/performance/edge cases/architectural fit, running comprehensive test suites, using automated security scanning (SAST/DAST/SCA), being specific with language/libraries/constraints/expectations in prompts, providing context with relevant code/architecture/conventions, iterating on requirements before generating, breaking complex tasks into smaller subtasks, and creating execution plans.

How to govern AI coding without bureaucracy

The governance challenge is counterintuitive: organizations needing governance most are least able to implement it effectively. The solution isn’t faster governance—it’s simpler governance.

The lightweight governance framework rests on three core principles. First, make AI-generated code obvious with clear comments: “AI-GENERATED: Claude 3.5 Sonnet (2024-10-22) / REVIEWED-BY: Sarah Chen (2024-10-25) / RATIONALE: Needed quick implementation for edge case handling.” Second, require architecture-aware review: someone who understands the architectural context validates every AI-generated change, verifying architectural alignment rather than just checking syntax. Third, track the metric that matters: not acceptance rates or lines of code per hour, but how often AI-generated code needs rewriting more than 30 days after shipping. Prioritize code longevity and maintainability over velocity.
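To make the first two principles checkable, the marker comment can be parsed mechanically. The sketch below assumes every marker follows exactly the example format above and treats the parenthesized date after the model name as the model version; the `MARKER`, `AIMarker`, and `parse_marker` names are hypothetical, not part of any existing tool.

```python
import re
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical pattern for the example marker format:
# "AI-GENERATED: <model> (<YYYY-MM-DD>) / REVIEWED-BY: <name> (<YYYY-MM-DD>) / RATIONALE: <text>"
MARKER = re.compile(
    r"AI-GENERATED:\s*(?P<model>.+?)\s*\((?P<version>\d{4}-\d{2}-\d{2})\)"
    r"\s*/\s*REVIEWED-BY:\s*(?P<reviewer>.+?)\s*\((?P<reviewed>\d{4}-\d{2}-\d{2})\)"
    r"\s*/\s*RATIONALE:\s*(?P<rationale>.+)"
)


@dataclass
class AIMarker:
    model: str
    model_version: date
    reviewer: str
    reviewed_on: date
    rationale: str


def parse_marker(comment: str) -> Optional[AIMarker]:
    """Return the parsed marker, or None if the comment lacks a complete marker."""
    m = MARKER.search(comment)
    if m is None:
        return None
    return AIMarker(
        model=m["model"],
        model_version=date.fromisoformat(m["version"]),
        reviewer=m["reviewer"],
        reviewed_on=date.fromisoformat(m["reviewed"]),
        rationale=m["rationale"].strip(),
    )


example = (
    "# AI-GENERATED: Claude 3.5 Sonnet (2024-10-22) / REVIEWED-BY: Sarah Chen "
    "(2024-10-25) / RATIONALE: Needed quick implementation for edge case handling."
)
marker = parse_marker(example)
```

A pre-merge check could reject any file whose marker fails to parse, which enforces both the labeling rule and the requirement that a named reviewer signed off.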

GitHub’s enterprise governance model (Agent Control Plane, October 2025) offers production-tested patterns. A consolidated administrative view monitors all AI agent activity with 24-hour session visibility, filterable by agent type and task state (completed, cancelled, in progress). An MCP allowlist, managed through a registry URL, controls which tools agents can access. Push rules protect static file paths used by custom agents, and fine-grained permissions control administrative access to AI features. Policies operate at three layers: the enterprise level controls licensing and feature availability, the organization level manages tool enablement and public code matching policies, and the repository level defines custom agents and security rules. Key features include suggestion-matching policies for public code, IP indemnity protection against copyright claims, feedback collection controls, model selection policies, and approval workflows for extensions and integrations.
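The three-layer structure implies a precedence rule for any setting that more than one layer can touch. The sketch below models one plausible rule, in which a higher layer that sets a value overrides the layers beneath it and an unset layer defers downward. It is a generic illustration with a hypothetical `effective_setting` function, not GitHub’s actual policy-resolution logic.

```python
from typing import Optional


def effective_setting(
    enterprise: Optional[bool],
    organization: Optional[bool],
    repository: Optional[bool],
    default: bool = False,
) -> bool:
    """Resolve a boolean setting across three layers (hypothetical model).

    None means "not set here; defer to the next layer down". The first layer
    that sets a value wins, checked from enterprise to organization to repository.
    """
    for setting in (enterprise, organization, repository):
        if setting is not None:
            return setting
    return default


# Enterprise leaves the choice open, the organization enables it, the repository opts out:
print(effective_setting(None, True, False))  # True: the organization setting takes precedence
```

Under this model, an enterprise that wants a hard guarantee sets the value explicitly, while leaving it unset delegates the decision to organizations.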

A practical governance checklist provides the implementation roadmap. Foundational artifacts include an adoption policy with clear guidelines on when and how to use AI tools, prompt usage guidelines capturing best practices, a secret and credential blocklist that prevents hardcoded credentials, license utilization tracking that monitors seat assignments, a regular telemetry review cadence for analyzing effectiveness, audit sampling of rejected suggestions to understand developer decisions, and enablement playbooks with role-specific guidance. Security-specific controls cover authentication and authorization (checks for hardcoded credentials, OAuth scope validation), data validation (scanning for SQL injection, XSS, and missing input sanitization), and cryptography (verifying approved algorithms, key lengths, and secure random number generation).
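A secret and credential blocklist can start as a handful of regular expressions run against changed files before commit. The patterns below are illustrative assumptions (the AWS access key ID shape, PEM private key headers, and quoted string assignments to password-like names), not a complete ruleset; production teams typically rely on dedicated scanners such as GitHub secret scanning.

```python
import re

# Hypothetical blocklist: illustrative patterns only, not a complete ruleset.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    # Quoted literal of 4+ characters assigned to a password-like name (case-insensitive).
    "hardcoded_password": re.compile(
        r"""(?i)(?:password|passwd|secret|api_key)\s*[:=]\s*["'][^"']{4,}["']"""
    ),
}


def scan_for_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for every blocklist hit in `text`."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, name))
    return hits


sample = 'aws_key = "AKIAIOSFODNN7EXAMPLE"\npassword = "hunter22"\nuser = "alice"\n'
print(scan_for_secrets(sample))  # [(1, 'aws_access_key_id'), (2, 'hardcoded_password')]
```

Regex blocklists produce false positives and miss novel formats, so they work best as a fast first line of defense in front of a maintained scanner.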

The two-page document approach replaces comprehensive frameworks. Start with one pilot team testing AI tools. Measure whether they can ship faster while maintaining defect rates. Document what works in a two-page guide that can be read in five minutes. Iterate and expand based on actual results, not theoretical frameworks. This pragmatic pattern appears consistently across successful implementations; complexity kills adoption velocity.
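The pilot’s single question can be expressed as a pass/fail check against the pre-AI baseline. This sketch assumes you already collect per-PR cycle times and a count of defects attributed to merged PRs; the 10% relative tolerance on defect rate and the `PeriodStats` and `pilot_passes` names are hypothetical choices, not figures from the studies cited here.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class PeriodStats:
    cycle_times_hours: list[float]  # open-to-merge time per PR (must be non-empty)
    defects: int                    # defects traced back to PRs merged in the period
    prs_merged: int


def defect_rate(stats: PeriodStats) -> float:
    return stats.defects / stats.prs_merged if stats.prs_merged else 0.0


def pilot_passes(baseline: PeriodStats, pilot: PeriodStats, defect_tolerance: float = 0.10) -> bool:
    """True if median cycle time fell and the defect rate rose by at most `defect_tolerance` (relative)."""
    faster = median(pilot.cycle_times_hours) < median(baseline.cycle_times_hours)
    quality_held = defect_rate(pilot) <= defect_rate(baseline) * (1 + defect_tolerance)
    return faster and quality_held
```

The median is used because cycle-time distributions are usually skewed by a few long-running PRs, which would distort a mean.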

A policy framework structure balances comprehensiveness with usability:

- Purpose: establishes the core objective of maintaining data security and code integrity.

- Scope: clarifies that the policy applies to all developers, with defined boundaries and a list of approved tools.

- Responsible use: positions AI as an aid, not a substitute; developers remain accountable for meeting existing quality standards.

- Intellectual property: requires compliance with IP law, using features that prevent copying of public code and mitigate the risk of copyleft contamination.

- Output validation: mandates scrutiny of AI output, including security risk assessment and verification against project requirements.

- Performance monitoring: enables ongoing assessment of effectiveness, with quality tracking and issue identification.

- Documentation: maintains detailed records of who used AI, for what purpose, and in what manner.

- Training requirements: ensure developers are adequately trained on capabilities, limitations, and policy principles.

- Policy review: commits to regular updates so the policy keeps pace with evolving technology.

License and legal considerations call for an ongoing relationship with legal counsel. AI models trained on open-source code under copyleft licenses (such as the GPL) can produce code similar enough to its sources to trigger license obligations, in the worst case an obligation to release the source of the product that incorporates it. Mitigation strategies include using AI tools with transparent training data policies, running Software Composition Analysis (SCA) tools to detect license violations, keeping lawyers available for open-source licensing questions, and preferring AI tools with clear policies on handling copyleft code. Philips illustrates a practical philosophy: developers get broad GitHub Copilot access but bear full responsibility for the suggestions they accept. AI code must meet the same quality requirements as human-written code, and understanding the code is a prerequisite for the highest quality and safety.

Training and enablement should focus on appropriate boundaries. The philosophy shift matters: teach not how to use AI coding assistants but when NOT to use them. Junior developers benefit from AI when implementing quick features, but AI is a poor way to learn new frameworks because it creates false competency; focus on building judgment and understanding, not just shipping code. Mid-level developers achieve the greatest productivity gains, since they have enough experience to evaluate and refine AI-generated code and to assess its quality and architectural fit. Senior developers see the smallest incremental gains because they are already highly proficient; AI excels at helping them explore unfamiliar APIs but is dangerous for architectural decisions, where it lacks context on scaling and maintenance. The verification test principle: use AI only for tasks where you can verify the output’s correctness in less time than it would take to do the work yourself.

A multi-pronged learning journey sustains adoption. Kickoff events address purpose and strategy, explain the technology in the team’s context, and set expectations. Vendor-driven training provides asynchronous sessions with tool-specific tutorials and vendor best practices. Informal learning forums, including office hours, town halls, and peer-to-peer sessions (the most valuable), enable knowledge sharing. Protected experimentation time lets individuals learn without deadline pressure, for example through one- to two-week learning sprints with priorities reduced by 50% to absorb the productivity dip. Role-specific customization tailors training by role, tenure, coding activity, and application type, avoiding overpromising benefits to cohorts likely to see limited value.

The metrics framework measures what matters. Adoption metrics track pull requests containing AI code (the baseline indicator), daily active users (consistent engagement), and lines generated and accepted (volume), with segmented analysis of which developers and teams adopt fastest, adoption trajectory, repository-level patterns, and use case distribution. Benefits metrics monitor velocity indicators (coding time, with a 20-30% reduction target; merge frequency; cycle time; deployment frequency) and business outcomes (lead time, completed stories, planning accuracy, time to market). Risk and quality metrics assess code quality (PR size, rework rate on code less than 21 days old, code churn within two weeks of authoring, defect density, escaped bugs, technical debt), the review process (PRs merged without review as a high-risk indicator, review depth, time to approve, review time), and security (vulnerability detection rate, security incident count, compliance violations). Model performance uses computation-based metrics for bounded outputs (precision, recall, F1 score) and model-based metrics for unbounded outputs (coherence, relevance, fluency, factual accuracy).
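Rework rate counts changes that touch code less than 21 days old. A minimal sketch, assuming you can extract from version control each changed line’s change date and the date the original line was authored (for example from blame data); the `LineChange` type and both function names are hypothetical, and the per-segment helper mirrors the segmented analysis used for adoption metrics.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class LineChange:
    changed_on: date
    original_authored_on: Optional[date]  # None when the change adds a brand-new line


def rework_rate(changes: list[LineChange], window_days: int = 21) -> float:
    """Share of changed lines that modify code younger than `window_days` days."""
    if not changes:
        return 0.0
    rework = sum(
        1
        for c in changes
        if c.original_authored_on is not None
        and (c.changed_on - c.original_authored_on).days < window_days
    )
    return rework / len(changes)


def rework_by_segment(segments: dict[str, list[LineChange]]) -> dict[str, float]:
    """Compare segments such as AI-labeled versus baseline PRs."""
    return {name: rework_rate(changes) for name, changes in segments.items()}
```

Comparing the AI-labeled segment against the baseline, rather than reading either number alone, is what turns this into a risk signal.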

Implementation patterns balance measurement with pragmatism. LinearB’s label-based approach lets teams filter dashboards by AI label versus baseline, using either manual labeling or workflow automation (gitStream) that auto-labels PRs based on user lists, hint text, or yes/no buttons; in the resulting charts, blue lines track AI-assisted PRs and gray lines track the baseline. BCG’s 360-degree framework captures basic metrics (adoption rates, usage data, acceptance rates, code volume, time saved), engineering metrics (cycle times, code quality, test coverage), operational metrics (deployment frequency, MTTR, system reliability), and developer experience (satisfaction scores, onboarding time, skills development), with biweekly pulse checks enabling quick pivots. The critical insight is to avoid the “code churn double” problem: GitClear research projected code churn to double in 2024 with AI adoption as refactoring drops and copy/pasted code rises, so measuring output volume alone risks technical debt accumulation and skill atrophy.
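The label-based approach reduces to two steps: decide whether a PR is AI-assisted, then split metrics by that label. The sketch below mimics the user-list and hint-text rules in plain Python; it is not gitStream’s configuration syntax, and `AI_PILOT_USERS`, `AI_HINT_TEXT`, and the `ai-assisted` label are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical inputs: pilot participants and a checkbox line from a PR template.
AI_PILOT_USERS = {"alice", "bob"}
AI_HINT_TEXT = "[x] This PR contains AI-assisted code"


@dataclass
class PullRequest:
    author: str
    body: str
    labels: set[str] = field(default_factory=set)


def auto_label(pr: PullRequest, label: str = "ai-assisted") -> PullRequest:
    """Label the PR if its author is on the pilot list or the body contains the hint text."""
    if pr.author in AI_PILOT_USERS or AI_HINT_TEXT.lower() in pr.body.lower():
        pr.labels.add(label)
    return pr


def split_by_label(prs: list[PullRequest], label: str = "ai-assisted"):
    """Return (AI-assisted PRs, baseline PRs) for side-by-side dashboards."""
    ai = [p for p in prs if label in p.labels]
    baseline = [p for p in prs if label not in p.labels]
    return ai, baseline
```

Labeling by user list is coarse, since every PR from a pilot user counts as AI-assisted; the hint-text rule is more precise but depends on authors ticking the box.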

Critical implementation roadmap for technical leaders

Success requires systematic phased implementation balancing speed with sustainability, starting with targeted pilots and scaling based on measured results.

Phase 1: Foundation (Months 1-3) establishes infrastructure and validates the approach. Start with one pilot team that mixes experience levels, working on greenfield projects or new features. Capture baseline metrics before introducing AI and define success criteria upfront. Choose the right use cases: new code in popular languages (JavaScript, Python, Java), test generation and documentation, and boilerplate and scaffolding code; avoid legacy codebase refactoring and complex debugging initially. Establish lightweight governance: a two-page policy maximum, clear marking of AI-generated code, architecture-aware review requirements, and a single headline metric, code longevity. Set up measurement infrastructure: a PR labeling system (manual or automated), baseline metrics capture, dashboards for adoption, benefits, and risks, and a regular review cadence (biweekly pulse checks).

Phase 2: Learning and Iteration (Months 4-6) scales knowledge and refines practices. Implement structured training with role-specific learning paths, peer-to-peer sharing sessions, protected learning time (learning sprints), and a focus on “when not to use AI.” Refine governance based on data: track what works through metrics, adjust policies in response to actual rather than theoretical issues, document successful patterns, and share learnings across teams. Address security and compliance by integrating SAST/DAST into CI/CD, implementing SCA to catch license violations, establishing security review protocols for AI code, and creating escalation paths for issues. Scale gradually: expand to 2-3 additional teams, include different project types for diverse learning, maintain central documentation of patterns, and build a network of internal champions.

Phase 3: Scaling (Months 7-12) achieves organization-wide adoption. Organization-wide rollout uses tiered access based on readiness, mandatory training before tool access, clear escalation and support paths, and continuous feedback mechanisms. Advanced governance implements GitHub Enterprise AI Controls or equivalent, policy enforcement automation (gitStream, etc.), audit trail requirements, and compliance reporting mechanisms. Optimize value capture by reallocating time saved to high-value work, measuring business outcomes (time to market, customer satisfaction), calculating and reporting ROI, and adjusting resource allocation and hiring plans. Continuous improvement maintains regular policy reviews (quarterly minimum), stays current with AI model evolution, adapts to new capabilities and risks, and builds communities of practice for sharing.

A tool selection decision tree guides technology choices. Choose chat-based tools (ChatGPT, Claude) when you need an external mentor for architecture discussions, are debugging complex problems across large codebases, want explanations and learning support, or don’t need IDE integration. Choose IDE-integrated tools (Copilot, Cursor, Windsurf, Cline) when you want inline, real-time assistance, need project-wide code generation, value seamless workflow integration, or rely on frequent code completion. Choose agentic tools (Devin, Claude Code) when you have end-to-end autonomous workflows ready, can provide clear requirements and complete context, need 24/7 execution capability, or have strong governance and checkpoints in place.
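The decision tree can be encoded as a small function, which makes a team’s selection rules explicit and reviewable. This sketch is deliberately stricter than the prose: it recommends agentic tools only when ready workflows, clear requirements and context, and governance checkpoints are all in place, rather than when any one of them holds. The `Needs` fields are hypothetical names covering a subset of the conditions listed above.

```python
from dataclasses import dataclass


@dataclass
class Needs:
    inline_assistance: bool = False
    project_wide_generation: bool = False
    autonomous_workflows_ready: bool = False
    clear_requirements_and_context: bool = False
    governance_checkpoints: bool = False


def recommend_category(needs: Needs) -> str:
    # Agentic only when every prerequisite holds (stricter than the prose's "or").
    if (
        needs.autonomous_workflows_ready
        and needs.clear_requirements_and_context
        and needs.governance_checkpoints
    ):
        return "agentic"
    # In-flow help or project-wide generation points to an IDE-integrated tool.
    if needs.inline_assistance or needs.project_wide_generation:
        return "ide-integrated"
    # Otherwise: architecture discussion, debugging help, learning support.
    return "chat-based"


print(recommend_category(Needs(inline_assistance=True)))  # ide-integrated
```

Checking the agentic branch first means a team that satisfies all agentic prerequisites gets that recommendation even if it also wants inline help; reorder the branches if your policy prefers the opposite.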

Critical success factors define execution. DO: start simple with lightweight governance, focus on outcomes (defect rates, longevity) not activity (lines of code), require architectural review of all AI code, make AI-generated code clearly marked, provide role-specific training, measure end-to-end business impact, protect learning time for developers, build on DevOps/CI/CD foundation, integrate security from start, and iterate quickly based on data. DON’T: create comprehensive frameworks before piloting, measure only acceptance rates or velocity, skip security review because “AI checked it,” over-rely on AI for architectural decisions, force adoption without training, ignore learning curve productivity dip, let junior developers learn frameworks via AI, sacrifice quality for speed, deploy without proper DevSecOps foundation, or make governance more complex than necessary.

Organizational prerequisites enable success. Technical requirements include mature CI/CD pipelines (43%+ automation), DevSecOps practices in place, version control and code review processes, testing automation foundation, and monitoring and observability systems. Cultural requirements demand trust-based developer relationships, blameless post-mortem culture, continuous learning mindset, cross-functional collaboration, and willingness to experiment and iterate. Operational requirements need executive sponsorship and support, budget for tools and training, time allocation for learning, clear communication channels, and feedback mechanisms.

Risk mitigation strategies address predictable challenges. Technical risks call for mandatory review of all AI code, tracking rework rates as a leading indicator, monitoring technical debt accumulation, quality gates in CI/CD that catch code quality issues, security scanning during development with automated vulnerability detection on PRs, regular security audits of AI code, and incident response plans for AI-introduced security vulnerabilities. Organizational risks fall into two groups. Skill atrophy is addressed by balancing AI usage with skill development, preventing over-reliance by junior developers, maintaining coding fundamentals training, and regularly assessing developer capability. Over-reliance is addressed by establishing clear use case boundaries, training on AI limitations, requiring human understanding of all code, and keeping architecture decisions human-driven. Process risks also fall into two groups. Pipeline bottlenecks are addressed by scaling test automation with code velocity, increasing review capacity proactively, automating deployment processes, and monitoring for emerging bottlenecks. Quality-versus-velocity tradeoffs are addressed by establishing non-negotiable quality standards, measuring outcomes rather than just output, balancing speed with sustainability, and running regular quality assessments.
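A CI/CD quality gate is ultimately a set of thresholds compared against metrics computed for a change. The sketch below assumes an earlier pipeline step has already produced rework rate, new high-severity scanner findings, and test coverage; the metric names and threshold values are hypothetical placeholders to calibrate against your own baseline. A CI job would exit non-zero whenever `gate_exit_code` returns 1.

```python
# Hypothetical thresholds; calibrate against your own pre-AI baseline.
THRESHOLDS = {
    "max_rework_rate": 0.15,
    "max_new_high_severity_findings": 0,
    "min_test_coverage": 0.80,
}


def evaluate_gate(metrics: dict[str, float]) -> list[str]:
    """Return a human-readable reason for every threshold the change violates."""
    failures = []
    if metrics["rework_rate"] > THRESHOLDS["max_rework_rate"]:
        failures.append(f"rework rate {metrics['rework_rate']:.0%} exceeds limit")
    if metrics["new_high_severity_findings"] > THRESHOLDS["max_new_high_severity_findings"]:
        failures.append(f"{int(metrics['new_high_severity_findings'])} new high-severity security findings")
    if metrics["test_coverage"] < THRESHOLDS["min_test_coverage"]:
        failures.append(f"test coverage {metrics['test_coverage']:.0%} below minimum")
    return failures


def gate_exit_code(metrics: dict[str, float]) -> int:
    """0 lets the pipeline continue; 1 should fail the build."""
    failures = evaluate_gate(metrics)
    for reason in failures:
        print(f"GATE FAILED: {reason}")
    return 1 if failures else 0
```

Reporting every violated threshold, instead of stopping at the first, gives the author the full list to fix in one pass.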

The likely evolution of these tools suggests capabilities to prepare for. In the near term (2025-2026): orchestrated agents that auto-generate user stories, code, and tests, with dashboards exposing their rationale for human approval; agentic development with multi-agent coordination across the SDLC; and enhanced context awareness that understands full application architecture. In the medium term (2027-2028): complete application creation from high-level descriptions, with humans focusing on requirements and business logic; autonomous testing with self-maintaining test suites; and predictive development that suggests features based on user behavior. In the long term (2029+): quantum computing integration enhancing AI capabilities, AI-driven product evolution with self-optimizing applications, and a transformation of human roles toward strategists and architects who focus on business value, with verification and validation as their primary activities.

The bottom line for technical leaders

AI-assisted coding represents a fundamental transformation requiring organizational change, not just tool deployment. The data demonstrates clear benefits (10-30% productivity improvements, 70-95% gains in developer satisfaction, and measurable quality improvements with proper governance), but only for organizations that approach adoption systematically.

What actually works: lightweight governance (two-page policies), outcome focus (code longevity and business impact, not lines generated), developer empowerment with accountability, comprehensive training emphasizing boundaries, continuous measurement with objective metrics, security integration from the start, and business alignment connecting AI adoption to measurable outcomes. Organizations implementing these patterns capture the most value.

What fails: comprehensive frameworks before piloting, top-down mandates without developer input, measuring only velocity metrics, skipping security review, forcing adoption without training, ignoring learning curves, sacrificing quality for speed, deploying without DevSecOps foundation, creating bureaucratic governance, and making simplistic ROI assumptions.

The competitive window is narrowing: by 2028, an estimated 75% of enterprise software engineers will use AI coding assistants. Organizations that master adoption now build a competitive advantage while others are still figuring out the basics. The market is projected to grow from $5.5B in 2024 to $47.3B by 2034, with productivity gains of 25-30% achievable by 2028 for teams using AI throughout the SDLC. But success demands treating integration as an organizational transformation, with systematic process adaptation, comprehensive training, pragmatic governance, continuous measurement, and cultural evolution.
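The two market figures imply a compound annual growth rate, a useful sanity check when presenting the projection. The arithmetic below uses only the numbers in this paragraph: growing from $5.5B to $47.3B over ten years requires roughly 24% growth per year.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


rate = cagr(5.5, 47.3, 2034 - 2024)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 24.0%
```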

The governance paradox resolves itself: the best AI governance is governance you barely notice, because it is embedded in daily workflows, automated where possible, and focused on enabling developers to build better software faster. Organizations that build elaborate frameworks will perpetually lag. Technical leaders who embrace lightweight, iterative governance while investing in their people capture the greatest value. The future belongs to organizations that can govern tools evolving faster than traditional processes, which makes this the most important technical leadership challenge of 2025-2028.

AI Coding Assistants - Research & Resources

A comprehensive collection of research studies, implementation guides, and tools for AI-assisted software development.

Core Research & Empirical Studies

- METR - AI Impact on Experienced Developers - Rigorous RCT showing 19% slowdown for experienced developers

- ACM - Measuring GitHub Copilot’s Impact on Productivity - Academic study on productivity metrics and acceptance rates

- ZoomInfo Case Study (arXiv) - Results from a real-world deployment to 400+ developers

- GitHub - Accenture Enterprise Study - RCT showing 55% faster completion with quality improvements

- Georgetown CSET - Cybersecurity Risks of AI Code - Security vulnerability analysis (roughly 50% of generated code snippets contained potentially exploitable bugs)

- arXiv - CodeSecEval Security Study - Only 55% of AI code is secure across major LLMs

Market Analysis & Surveys

- Stack Overflow 2024 Developer Survey - AI Section - 65K+ developers on AI tool usage and trust

- Andreessen Horowitz - Enterprise AI in 2025 - 100 CIOs on AI adoption and market dynamics

- Menlo Ventures - 2025 LLM Market Update - Market sizing and code generation economics

- Writer - Enterprise AI Adoption Survey - Gap between leadership and employee perspectives

- GitHub - AI Wave Survey - Developer adoption trends and patterns

Implementation Guides & Best Practices

- DX - Enterprise AI Code Adoption Guide - Comprehensive implementation framework with phased approach

- Augment Code - AI Governance Framework - Policy structures and compliance patterns

- GitHub Resources - AI Governance Framework - Official GitHub governance documentation

- Google Cloud - Five Best Practices for AI Coding - Plan-code-validate workflow

- DX - Collaborative AI Coding Guide - Team workflow integration strategies

Problem Analysis & Quality Issues

- Medium - Coding on Copilot (GitClear) - Data on code quality degradation and churn

- Cerbos - Productivity Paradox - Analysis of perception vs. reality gap

- LeadDev - AI Coding Mandates Issues - Organizational friction and burnout

- O’Reilly - AI-Resistant Technical Debt - Long-term maintainability challenges

- Substack - The 70% Problem - Incomplete prototypes and finishing challenges

Tools & Automation

- Qodo - Automated Code Review Tools - Comprehensive tool comparison

- Graphite - AI Code Review Tools 2024 - Open-source review tooling landscape

- SonarSource - Code Review Solutions - Static analysis and quality gates

- Snyk - Securing GenAI Development - Security scanning integration

- GitHub Marketplace - AI Code Review Action - Practical GitHub Actions implementation

- Semgrep Guide (0xdbe) - Security scanning setup tutorial

Security & Vulnerabilities

- Endor Labs - Common Security Vulnerabilities in AI Code - Specific vulnerability patterns

- DevTech Insights - Trust vs. AI Code - Trust issues and verification strategies

Specialized Research

- arXiv - Survey on Code Generation with LLM Agents - Academic survey on agentic coding approaches